When we think of the future of automobiles, it doesn’t take long for the concept of autonomous vehicles to come up. Autonomous vehicles (otherwise known as self-driving vehicles, driverless vehicles, or robotaxis) have been positioned by their manufacturers as one of the most advanced, convenient, and safest forms of transportation. While there may be truth to some of these assertions, our Los Angeles car accident lawyers find the method and speed at which autonomous vehicles are being developed, tested, and manufactured careless and negligent.

The bottom line is that while some data suggests that self-driving cars may be safer than traditional automobiles, they are far from being perfect. What’s worse is that when they fail, they do so unexpectedly and in unpredictable ways. Regardless of where you stand on the debate, as more and more autonomous vehicles appear on California roads, it’s important to know what to do if you’re injured by one and who to hold responsible. For more information, contact a Los Angeles car accident lawyer at MKP Law Group, LLP today for a free consultation.

Let’s Talk About Zoox

Zoox is an autonomous vehicle company that is owned by Amazon. The company has offered fully driverless public transport services since 2018, and unlike other types of driverless vehicles, Zoox’s fleets are designed from scratch to function with minimal or no human input.

In the limited time that Zoox has been available to the general public (exclusively in California and Nevada) there have been approximately 75 accidents. Of these, there were six reported accidents that resulted in injuries. In May 2024, a driverless Zoox was involved in two separate rear-end accidents that injured at least two motorcyclists. In these incidents, the Zoox vehicle braked unexpectedly with insufficient time for the motorcyclists to avoid collision, according to Reuters.

As a result of these incidents, the National Highway Traffic Safety Administration (NHTSA) launched an investigation into the ability of Zoox’s technology to read the trajectory of other vehicles.

A year later, another incident occurred in Las Vegas. In April of 2025, a Zoox vehicle collided with a car after the car approached the lane where the Zoox was operating, though no injuries resulted. In light of these incidents, Zoox voluntarily recalled 270 vehicles to apply a software update. After the company claimed that the issue was resolved, the NHTSA closed its investigation, and service resumed in Las Vegas.

Is Zoox Safer Than Waymo?

It’s hard to know. While driverless transportation services have been around for nearly a decade, there is still limited information on the true causes of autonomous vehicle accidents and how frequently they occur. Many autonomous vehicle companies have been successful at shielding their safety reports, even when implored to make them public by government entities such as the DMV.

Another important piece of the puzzle is the number of vehicles in some companies’ fleets. For example, we know that Zoox has at least a few hundred vehicles in its fleet, but without knowing the total number of vehicles, we can’t determine an accident rate percentage.

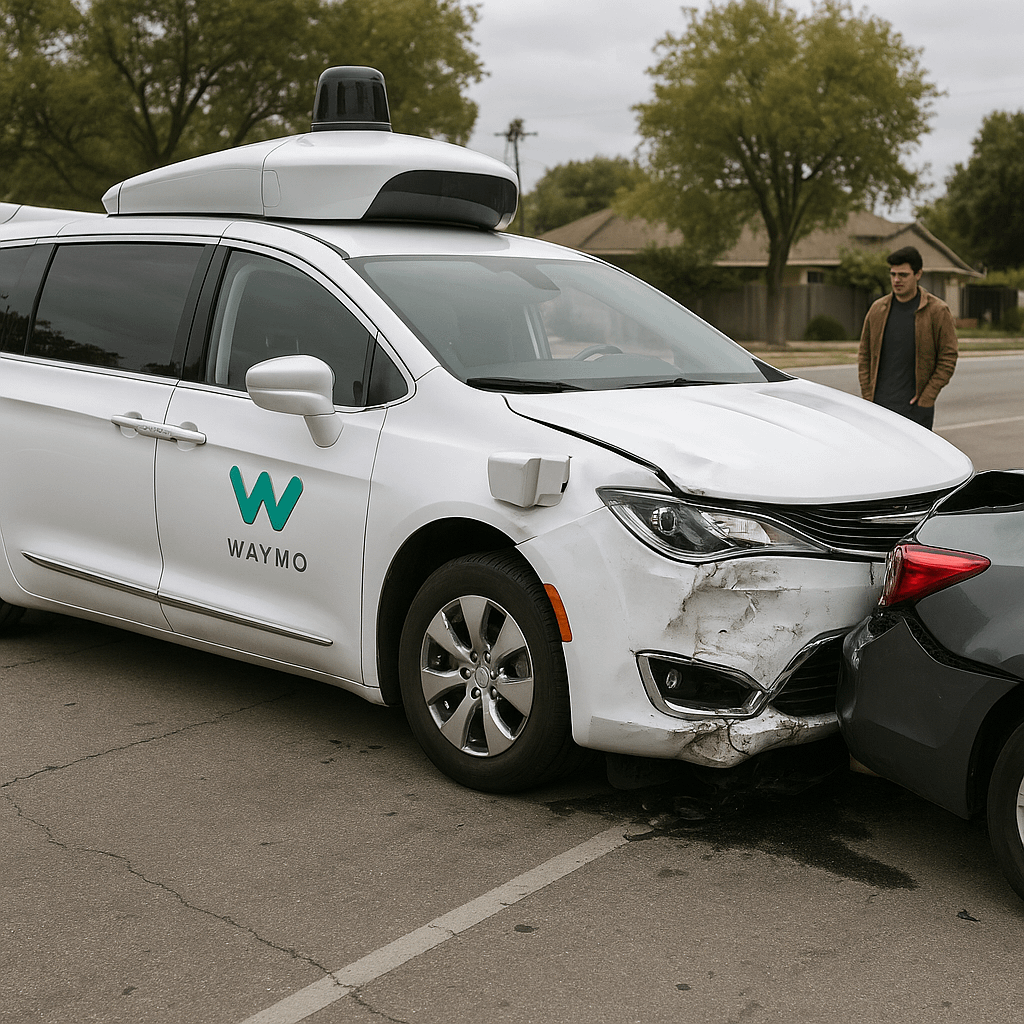

Based on the total number of publicly known accidents, Waymo has been involved in far more incidents and injuries than Zoox. As of 2024, the NHTSA reported that there had been nearly 700 confirmed accidents involving a Waymo vehicle. According to the same report, these accidents resulted in nearly 50 injuries. Unlike Zoox, Waymo has also been linked to two reported deaths: one human in 2025 and one canine in 2023, though the details are still being investigated.

What Happens If I’m Injured While Traveling in an Autonomous Vehicle?

In many ways, liability in autonomous vehicle accidents mirrors that of other types of car accidents. But there are certainly some variances. Determining which party may be liable depends on the circumstances of the accident. Here are a few possible examples:

When an Autonomous Vehicle is Hit by Another Car

If the autonomous car was driving in a safe and orderly manner, following all speed limits and traffic regulations, and it was hit by another driver, that driver would likely be at fault. However, as we’ve learned, many autonomous vehicles struggle to recognize the trajectory of other vehicles and have been known to accelerate, brake, and turn erratically. If this happens, the manufacturer of the autonomous vehicle may be held liable, depending on the involvement of the driver in the other vehicle. As California is a comparative negligence state, it is even possible that both the manufacturer and the driver of the other vehicle may be required to pay damages.

When an Autonomous Vehicle Hits Another Car, Person, or Object

In this scenario, it’s likely that the driverless vehicle manufacturer will be held at least partly liable, especially if there were no other at-fault parties. Furthermore, while there is some unconfirmed data that suggests that autonomous vehicles have a lower crash rate than regular vehicles, it also suggests that they are more likely to unexpectedly hit pedestrians, cyclists, and random objects such as guard rails due to faulty software, which would likely be covered under the manufacturer’s insurance.

Other Scenarios

Apart from standard car crashes, autonomous vehicles have also been known to misdirect their passengers and leave them stranded in unsafe locations due to faulty navigational software. This has also created scenarios where passengers are trapped inside the vehicle while it redirects. If a person is stranded or trapped in an unsafe location and is injured or harmed as a result, they may be able to file a personal injury claim for compensation for medical bills or pain and suffering.

The Elephant in the Room

So, what is the real issue behind driverless vehicles, and why do these types of issues surface again and again? One commonly overlooked aspect of the autonomous vehicle industry is that it often mirrors other types of technological races we’ve seen in recent history, such as the Dot-Com bubble, social media algorithms, or AI advancement. In each of these examples, the tech leaders of the time rushed to develop (and profit from) their own brand of the same technology, while failing to do so responsibly. The autonomous car industry is no different, and in fact, the largest companies within the industry include Google (which owns Waymo), Amazon (which owns Zoox), and Tesla (which is scheduled to release its brand, Robotaxi, this year). What is different is that this technology is directly responsible for transporting passengers safely from point A to point B, and if it fails, human lives are directly at stake.

It’s also worth noting that these companies’ motivation for developing their respective brands of driverless cars goes beyond transportation. Amazon, whose success relies on its delivery services, and Waymo, which has already entered into contracts with some of the largest trucking companies in the country, both have millions on the line that hinge on a fast release. Unfortunately, they also rely on the unsuspecting public to test their products for them.

The so-called innovation happening in the driverless vehicle sector isn’t being driven solely by public benefit or safer roads; it’s being driven by the need to dominate logistics, capture delivery markets, and slash labor costs. And while these companies push their vehicles into more cities, often under limited regulatory oversight, pedestrians, cyclists, and passengers become real-world beta testers.

Who Faces the Biggest Risk of Injury From Waymo and Zoox Autonomous Vehicles?

Perhaps the most ethically-challenging aspect of self-driving vehicles is that some of the safety data regarding sensors seems to suggest that they’re better at detecting some groups over others. To make matters worse, the pedestrians that they struggle to detect are typically marginalized. According to studies by the University of Illinois, Georgia Tech, and King’s College, the most vulnerable groups are:

Pedestrians and cyclists: Autonomous vehicles failed to detect approximately 7% of pedestrians as a whole, and in one study, failed to recognize 5 out of 15 cyclists crossing at an intersection. Furthermore, missed detection rates spiked to 64% during poor weather or nighttime conditions.

Children: Autonomous vehicles are significantly worse at recognizing child pedestrians. The technology, in its current form, detects adults at a 20% higher frequency than children.

People with darker skin: Fairer-skinned individuals are 7.5% more likely to be detected than individuals with darker skin tones.

Animals: While the data is limited on the ability of autonomous vehicles to recognize animals, one of the studies suggests that animals are at risk, particularly flying animals and mammals, and specifically bats, deer, and squirrels. There has been at least one report of a dog killed by an autonomous vehicle. This is not only a threat to the animals involved, but also to the passengers who may be injured by broken windshields, airbags, or sudden stops.

Our Los Angeles Autonomous Vehicle Injury Attorneys Can Help

As we’ve addressed throughout this guide, the primary challenge of fully understanding the safety risks involved with riding in a Waymo, Zoox, or other robotaxi is that safety reports are closely guarded by the companies themselves. As these services become more and more available, it’s important to know your legal options in the event that you or someone you love is injured or killed in an autonomous vehicle accident. To learn more, call or contact MKP Law Group, LLP today for a free consultation with a Los Angeles AV accident lawyer.
